What is AWS PrivateLink?

As an organization grows and takes on more software projects, it commonly ends up with a hybrid network spanning many virtual private clouds (VPCs). We therefore need a service that can connect these networks in a more integrated way.

AWS PrivateLink, as the name suggests, is a way to connect your VPCs with AWS services and on-premises networks privately without exposing the involved traffic to the internet. What makes AWS PrivateLink special is that it brings together two powerful concepts:

- Virtual Private Cloud (VPC)
- Software as a Service (SaaS)

With AWS PrivateLink, you can connect services across multiple accounts and VPCs while maintaining a much simpler network architecture. Now that we know what AWS PrivateLink is, let’s see how to use it.

How to use AWS PrivateLink?

To use AWS PrivateLink, we first need to create a VPC endpoint in our VPC. There are three types of VPC endpoints:

- Gateway Endpoint
- Interface Endpoint
- Gateway Load Balancer Endpoint

The type of VPC endpoint depends, of course, on the service we’re trying to connect to and its supported configuration. Interface endpoints and Gateway Load Balancer endpoints are powered by AWS PrivateLink; gateway endpoints instead work through route table entries. Once an interface endpoint for a service that supports AWS PrivateLink is in place, a virtual interface known as an elastic network interface is created in the chosen subnet with a private IP address. This IP address then serves as the entry point for traffic destined for the service.
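As a rough illustration of the step above, the sketch below assembles the parameters for an interface endpoint request. It assumes the request would be sent with the boto3 EC2 client's `create_vpc_endpoint` call; the helper name `interface_endpoint_request` and all resource IDs are hypothetical and chosen only for this example.

```python
def interface_endpoint_request(vpc_id, service_name, subnet_ids,
                               security_group_ids, private_dns=True):
    """Build keyword arguments for an interface-type VPC endpoint request.

    Hypothetical helper: it only assembles a dict and does not call AWS.
    """
    if not subnet_ids:
        # One elastic network interface is created per subnet we pass in.
        raise ValueError("an interface endpoint needs at least one subnet")
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": service_name,
        "SubnetIds": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
        "PrivateDnsEnabled": private_dns,
    }


# All IDs below are placeholders, not real resources.
request = interface_endpoint_request(
    vpc_id="vpc-0123456789abcdef0",
    service_name="com.amazonaws.us-east-1.sqs",
    subnet_ids=["subnet-0aaa1111bbbb2222c"],
    security_group_ids=["sg-0123456789abcdef0"],
)
print(request)

# With boto3 installed and credentials configured, the request could be sent as:
#   boto3.client("ec2").create_vpc_endpoint(**request)
```

Keeping the parameters in a plain dictionary makes it easy to review or log the request before anything is created in your account.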

PrivateLink supports three kinds of services:

- AWS Services
- Customer hosted internal services
- Third-party services (SaaS)

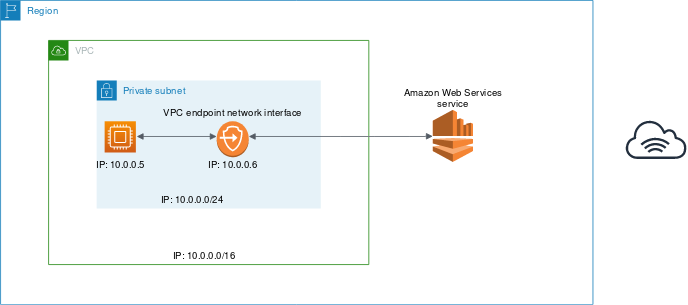

The following diagram depicts the whole process of connecting to an AWS service via a VPC endpoint:

Image Source: AWS Documentation

Why do you need AWS PrivateLink?

1. Security

Because AWS PrivateLink keeps network traffic off the public internet, it reduces exposure to threats such as brute-force and denial-of-service attacks. Additionally, AWS PrivateLink lets you define a VPC endpoint policy that controls which principals can use the endpoint and which actions they can perform on the service.
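To make the idea of a VPC endpoint policy more concrete, here is a minimal sketch that builds one as a JSON document. The helper name `endpoint_policy`, the account ID and the queue name are assumptions made for illustration; the set of actions you allow depends on the service behind your endpoint.

```python
import json


def endpoint_policy(actions, resources):
    """Return a policy document that allows only the listed actions and resources.

    Hypothetical helper for illustration; it does not call AWS.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                "Action": list(actions),
                "Resource": list(resources),
            }
        ],
    }


# Placeholder account ID and queue name.
policy = endpoint_policy(
    actions=["sqs:SendMessage"],
    resources=["arn:aws:sqs:us-east-1:123456789012:example-queue"],
)
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

A document like this could be supplied as the policy when the endpoint is created or modified. Anything not explicitly allowed by the policy is not permitted through the endpoint.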

2. Simpler Network Architecture

AWS PrivateLink simplifies your network architecture by letting you connect services across multiple accounts without managing complex firewall rules or route tables. AWS services can therefore be shared across different VPCs without the need for an internet gateway or supernetting, which makes the network easier to manage globally.

3. Cloud Migration made easy

Because your traffic stays private, you have less network exposure to worry about, which makes cloud migration both more secure and easier. With AWS PrivateLink, you can migrate to applications hosted in the cloud and get the best of both worlds: using cloud services without your traffic traversing the internet.

AWS PrivateLink Use-Cases

1. Private Access to SaaS applications

Traditionally, a SaaS provider would install an agent or a client in each customer’s VPC, for example for analytics, because this was a necessary step to send the data back to the provider. With AWS PrivateLink, SaaS providers can instead build secure and scalable applications on AWS and provide these services to many customers privately.

Here’s how: a SaaS provider places a Network Load Balancer in front of the instances in their VPC and exposes it as an endpoint service. Intended customers can then be granted access to this endpoint service. A customer creates an interface endpoint in their own VPC and starts using the service. Since only two parties are involved in this relationship, the service provider and the customer, the exposure to external attacks and unwanted communication is greatly reduced.
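The provider and customer steps described above can be sketched as an ordered plan of API operations. This is only an outline under stated assumptions: the operation names are those of the boto3 EC2 client, the helper `privatelink_plan` is hypothetical, and every ARN and ID is a placeholder. In a real setup, the service ID and service name come back from the first call rather than being constructed by hand.

```python
def privatelink_plan(nlb_arn, service_id, region, consumer_account_id,
                     consumer_vpc_id, consumer_subnet_ids):
    """Return (party, operation, parameters) steps for sharing a service privately.

    Hypothetical helper: it describes the calls but does not execute them.
    """
    # Assumed naming pattern for customer-hosted endpoint services.
    service_name = f"com.amazonaws.vpce.{region}.{service_id}"
    return [
        # 1. The provider exposes the Network Load Balancer as an endpoint service.
        ("provider", "create_vpc_endpoint_service_configuration",
         {"NetworkLoadBalancerArns": [nlb_arn], "AcceptanceRequired": True}),
        # 2. The provider grants the customer's account access to the service.
        ("provider", "modify_vpc_endpoint_service_permissions",
         {"ServiceId": service_id,
          "AddAllowedPrincipals": [f"arn:aws:iam::{consumer_account_id}:root"]}),
        # 3. The customer creates an interface endpoint in their own VPC.
        ("consumer", "create_vpc_endpoint",
         {"VpcEndpointType": "Interface",
          "VpcId": consumer_vpc_id,
          "ServiceName": service_name,
          "SubnetIds": list(consumer_subnet_ids)}),
    ]


# Placeholder identifiers, for illustration only.
plan = privatelink_plan(
    nlb_arn="arn:aws:elasticloadbalancing:us-east-1:111111111111:"
            "loadbalancer/net/example-nlb/0123456789abcdef",
    service_id="vpce-svc-0123456789abcdef0",
    region="us-east-1",
    consumer_account_id="222222222222",
    consumer_vpc_id="vpc-0123456789abcdef0",
    consumer_subnet_ids=["subnet-0aaa1111bbbb2222c"],
)
for party, operation, params in plan:
    print(party, operation, params)
```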

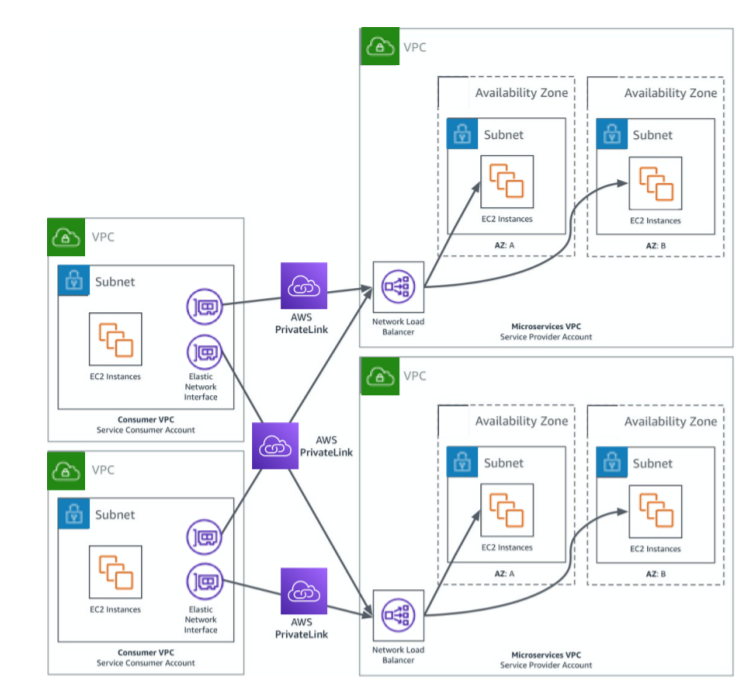

2. Microservices

A microservice architecture organizes an application into loosely coupled services, each of which performs a specific task. This kind of environment goes hand in hand with PrivateLink. You can deploy a service in a VPC and expose it as an endpoint service when it is ready to be used. The consumer can then request access and create an interface endpoint in their own VPC, just like in the previous use-case.

The diagram below illustrates communication among multiple service providers via PrivateLink:

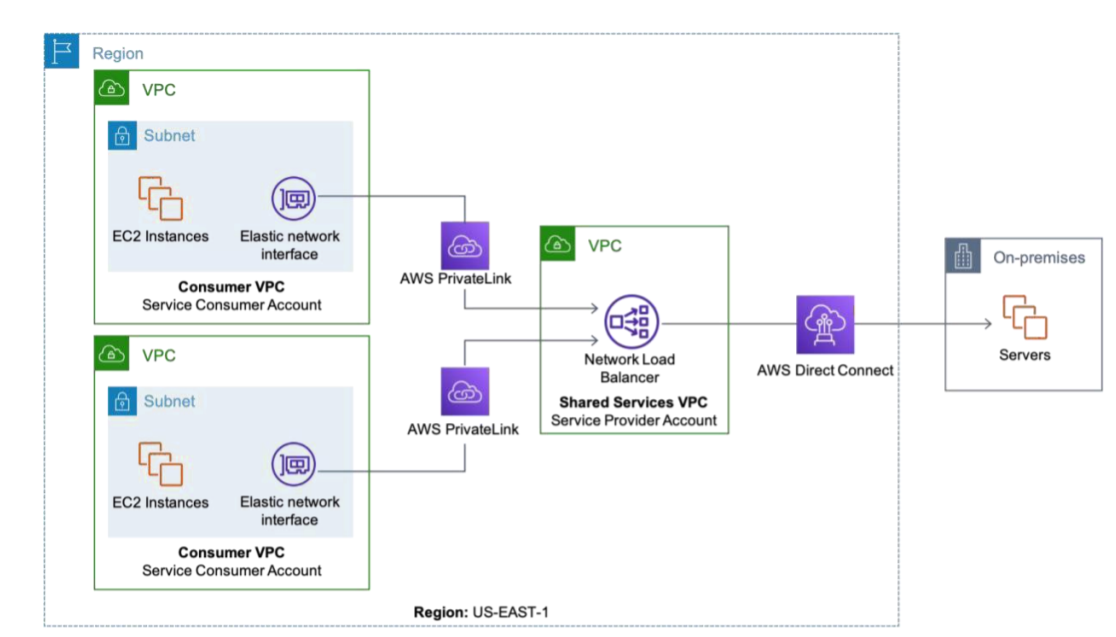

3. Shared services

Workloads deployed on AWS often share common dependencies such as security and authentication services. These services can be moved into a separate VPC to reduce redundancy and then shared with the other workloads, each of which has its own VPC. Because PrivateLink lets every service be shared through VPC endpoints, this approach leads to a more structured architecture and helps avoid inconsistencies.

4. Hybrid Cloud

While you are in the middle of a migration, you still need to use AWS services to serve your clients’ needs. The Network Load Balancer used with AWS PrivateLink can balance traffic across both AWS and on-premises targets, which makes migration much easier. Once the migration is complete, the on-premises targets are replaced by AWS instances, as shown in the diagram below:

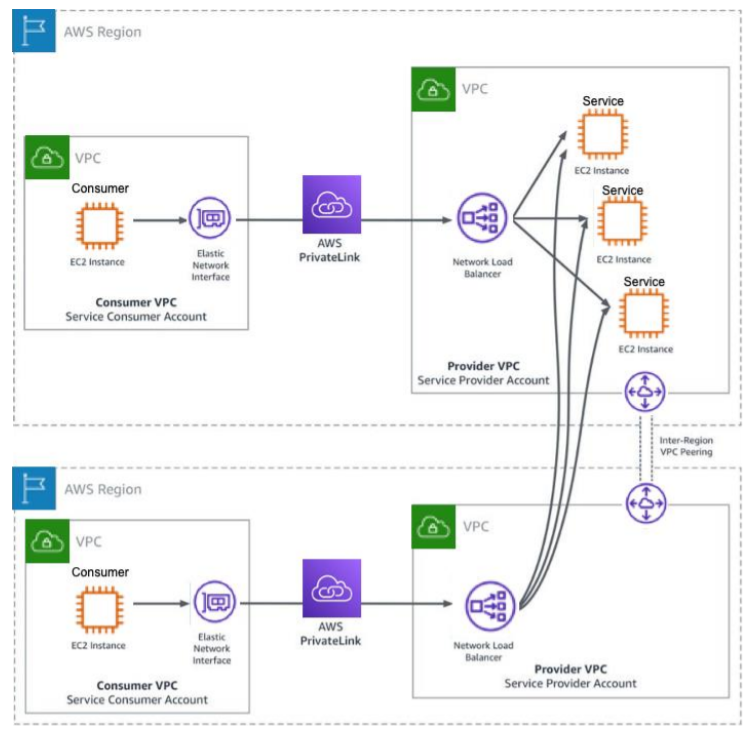

5. Inter-Region connectivity

Service providers can extend their services to other regions with the help of inter-region VPC peering. VPC peering is a network connection between two VPCs that lets you route traffic between them using private IP addresses. With inter-region VPC peering, consumers in a remote region reach the hosted services through a PrivateLink endpoint in that remote region.

This approach not only helps safeguard the traffic but also gives the SaaS provider the flexibility to deploy the services in the remote region when they’re ready. However, it incurs some latency and additional cost, since you’re communicating with a remote region. Nonetheless, inter-region VPC peering is a good alternative when the service cannot be provisioned in the remote region right away.

The figure demonstrates inter-region connectivity between US-East-1 and US-East-2 regions.

Best Practices

1. Choosing a DNS strategy

There are several options available for DNS based on your architecture such as:

a. Private DNS - automatically creates a zone-specific or region-specific private DNS name. The DNS name includes the VPC endpoint ID, the Availability Zone (for zone-specific names) and the region name. A sample DNS name in this category looks like -

vpce-1234-abcdev-us-east-1.vpce-svc-123345.us-east-1.vpce.amazonaws.com

b. Public DNS - includes AWS assigned public DNS names such as

ec2-public-ipv4-address.compute-1.amazonaws.com

c. On-premise DNS server

2. Considering your location

Since PrivateLink endpoints operate on a zonal basis, customers often use a zone-specific DNS name for each zone where the endpoint service is available. The hostname has the availability zone in its name, for instance -

vpce-0fe5b17a0707d6abc-29p5708s-us-east-1a.ec2.us-east-1.vpce.amazonaws.com
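The relationship between a regional and a zonal endpoint hostname can be sketched in a few lines. This assumes the naming pattern seen in the example above, where the availability zone is appended to the first label of the regional name; the function name `zonal_dns_name` is hypothetical. In practice it is safer to read the DNS entries that AWS returns for the endpoint than to construct them yourself.

```python
def zonal_dns_name(regional_name, availability_zone):
    """Derive a zone-specific hostname from a regional endpoint hostname.

    Assumes the pattern '<endpoint-id>-<suffix>-<az>.<rest of regional name>'.
    """
    endpoint_label, rest = regional_name.split(".", 1)
    return f"{endpoint_label}-{availability_zone}.{rest}"


regional = "vpce-0fe5b17a0707d6abc-29p5708s.ec2.us-east-1.vpce.amazonaws.com"
zonal = zonal_dns_name(regional, "us-east-1a")
print(zonal)
# prints vpce-0fe5b17a0707d6abc-29p5708s-us-east-1a.ec2.us-east-1.vpce.amazonaws.com
```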

When a service is deployed in more than one availability zone, AWS also supports an option of cross-zone load balancing. With cross-zone load balancing, requests are uniformly distributed across all registered instances in enabled availability zones.
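The effect of cross-zone load balancing is easiest to see with a small calculation. The sketch below is a simplified model, not a description of the load balancer's internals: it assumes each enabled availability zone receives an equal share of incoming traffic and that every zone has at least one registered target. The function name `traffic_share` is hypothetical.

```python
from fractions import Fraction


def traffic_share(targets_per_zone, cross_zone):
    """Return the fraction of total traffic that each target in a zone receives."""
    zone_count = len(targets_per_zone)
    total_targets = sum(targets_per_zone.values())
    shares = {}
    for zone, count in targets_per_zone.items():
        if cross_zone:
            # Traffic is spread over every target in every enabled zone.
            shares[zone] = Fraction(1, total_targets)
        else:
            # Each zone's equal slice is split only among its own targets.
            shares[zone] = Fraction(1, zone_count) / count
    return shares


targets = {"us-east-1a": 2, "us-east-1b": 8}
for enabled in (True, False):
    shares = traffic_share(targets, cross_zone=enabled)
    print(enabled, {zone: f"{float(s) * 100:.2f}%" for zone, s in shares.items()})
```

With two targets in one zone and eight in the other, every target receives 10% of the traffic when cross-zone load balancing is enabled. Without it, each target in the smaller zone receives 25% while each target in the larger zone receives 6.25%.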

3. Managing endpoint acceptance

Once you create an endpoint service, you can add permissions that make it accessible to specific principals. You can also specify that acceptance is required for incoming connection requests. If this option is enabled, you must manually accept or reject each connection request. This can be done via the AWS Console, the Command Line Interface (CLI) or the API. Endpoint acceptance thus provides an additional layer of security, especially when sensitive data is involved.
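If you handle acceptance through the API, the decision logic might look something like the sketch below. It assumes connection records shaped like the entries returned by the EC2 `describe_vpc_endpoint_connections` call; the function name `triage_connections`, the trusted-account set and all IDs are hypothetical.

```python
def triage_connections(connections, trusted_accounts):
    """Split pending connection requests into endpoint IDs to accept and to reject."""
    accept, reject = [], []
    for conn in connections:
        # Only requests that are still waiting for a decision are considered.
        if conn["VpcEndpointState"] != "pendingAcceptance":
            continue
        if conn["VpcEndpointOwner"] in trusted_accounts:
            accept.append(conn["VpcEndpointId"])
        else:
            reject.append(conn["VpcEndpointId"])
    return accept, reject


# Placeholder records, for illustration only.
pending = [
    {"VpcEndpointId": "vpce-0aaa", "VpcEndpointOwner": "222222222222",
     "VpcEndpointState": "pendingAcceptance"},
    {"VpcEndpointId": "vpce-0bbb", "VpcEndpointOwner": "333333333333",
     "VpcEndpointState": "pendingAcceptance"},
    {"VpcEndpointId": "vpce-0ccc", "VpcEndpointOwner": "222222222222",
     "VpcEndpointState": "available"},
]
to_accept, to_reject = triage_connections(pending, trusted_accounts={"222222222222"})
print(to_accept, to_reject)

# The two lists could then be passed to the EC2 client's
# accept_vpc_endpoint_connections and reject_vpc_endpoint_connections calls.
```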

4. Whitelisting principal

Whitelisting is the process of allowing only a limited set of users or accounts to access a service that might be sensitive to your business. Blacklisting works the other way around, allowing everything except what is explicitly blocked, so whitelisting is the more secure approach: it denies everything by default and only lets a selected few in.

Whitelisting in AWS can be achieved in a number of ways, one of which is the Principal element. The Principal element specifies who is allowed or denied access to a resource. It is used in a policy that is attached to a resource (also known as a resource-based policy), such as an S3 bucket. With this element, you can allow IAM roles, IAM users, AWS accounts, etc. to access your service via VPC endpoints.
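Principals are identified by ARNs, and a small helper can keep an allow list consistent. The sketch below is an illustration under assumptions: the function name `principal_arn` is hypothetical, the account ID and role name are placeholders, and only the standard `aws` partition is covered.

```python
import re

_ACCOUNT_ID = re.compile(r"^\d{12}$")


def principal_arn(account_id, kind="root", name=None):
    """Format an IAM principal ARN for a whole account, a role or a user."""
    if not _ACCOUNT_ID.match(account_id):
        raise ValueError("an AWS account ID is expected to be 12 digits")
    if kind == "root":
        # The ':root' form refers to the account as a whole.
        return f"arn:aws:iam::{account_id}:root"
    if kind in ("role", "user"):
        if not name:
            raise ValueError(f"a {kind} principal needs a name")
        return f"arn:aws:iam::{account_id}:{kind}/{name}"
    raise ValueError(f"unsupported principal kind: {kind}")


# Placeholder account ID and role name.
allow_list = [
    principal_arn("123456789012"),
    principal_arn("123456789012", kind="role", name="example-consumer-role"),
]
print(allow_list)
```

A list like this could be used as the allowed principals of an endpoint service, or as the Principal element of a resource-based policy.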

To read more about how to secure your software supply chain and avoid unwanted attacks, visit here.

How Packagecloud can help with SaaS?

Packagecloud is a cloud-based service for distributing different software packages in a unified, reliable, and scalable manner, without any external dependencies. With Packagecloud, you can keep all the packages that need to be distributed across your organization in one repository, regardless of the operating system or the programming language. Packagecloud also helps you distribute these packages securely, without having to own any of the infrastructure involved.

This enables users to save time and money on setting up servers for hosting packages pertaining to every Operating System. Packagecloud allows users to set up and update machines faster with less overhead than ever before.

Sign up for the packagecloud free trial to get your machines set up and updated easily!